Summary

This article examines the vulnerability of artificial intelligence (AI) systems to alpha transparency attacks through a simulated cyber tabletop scenario. The research demonstrates how manipulated images with hidden content in alpha layers can exploit the discrepancy between human and machine perception and compromise various AI-driven technologies from social media algorithms to critical infrastructure analysis.

The progressive series of simulated attacks presented here reveals vulnerabilities across diverse platforms, including content recommendation systems, visual search engines, and security scanners. In analogy to steganography attacks, these results show successful manipulations escalating from spam filter evasion to potential impacts on medical imaging and satellite imagery interpretation.

Also discussed are defensive strategies, including retraining vision algorithms, implementing transparency flattening, and developing methods to reveal hidden content to both users and machines. The research highlights the significant threat of dataset poisoning at scale, particularly given the widespread use of pretrained models and computational limitations in thorough model validation.

Introduction

The exponential growth of AI capabilities has expanded the available attack surfaces in the digital landscape. Following the well-known Moore’s Law, AI’s computing power doubles every two years, while AI’s generative or creative output in areas like text-to-image generation, text authoring, and music composition has been accelerating at an even more rapid pace since 2022 [1]. For instance, generative AI is now recreating the sum of historical human image output since 1850 approximately every 21 weeks, the 5,000-year human written output every 14 days, and the estimated previous 500-year musical output every minute [2]. This rapid advancement has led to the integration of AI into critical aspects of digital lives, from social media algorithms to e-commerce recommender systems.

However, this increasing reliance on AI introduces new vulnerabilities that malicious actors may exploit [3]. The research focuses on a novel attack vector—the use of alpha transparency layers in images [4–6] to manipulate AI recommender systems [7–11]. The technique builds upon the historical use of steganography in cyberattacks [12], such as the DUQU attack in 2011 that embedded malware in Joint Photographic Experts Group (JPEG) files and the Vawtrak financial malware in 2015 that hid update files in favicon images. Just as traditional malware evolves to evade detection by subtly altering its code to become polymorphic, polymorphic imageware can represent a chameleon in the visual domain. By manipulating the layers of an image, a determined AI attacker can spawn countless media variations that appear innocuous to human eyes while consistently deceiving AI systems, creating a vast array of visually distinct yet functionally identical cyber threats.

Alpha transparency, a feature supported by prominent digital image formats, introduces this vulnerability in AI-based image processing systems [13, 14]. Formats such as Portable Network Graphics (PNG), Graphics Interchange Format (GIF), WebP, ICO (Windows Icon), and Scalable Vector Graphics (SVG) all support varying degrees of transparency [4–6]. PNG and WebP offer full alpha channel support with 256 levels of opacity, while GIF provides binary transparency (fully transparent or fully opaque). SVG, as a vector format, can define transparency for any element. These formats represent images as multidimensional arrays, typically with four channels: red, green, blue, and alpha (RGBA). The alpha channel, controlling pixel opacity, ranges from 0 (fully transparent) to 255 (fully opaque) in 8-bit systems.
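
To make the RGBA representation concrete, the following minimal Python sketch blends a single 8-bit RGBA pixel over an opaque background using the standard "over" rule, C_out = alpha * C_fg + (1 - alpha) * C_bg, with alpha scaled from the 0 to 255 range to 0.0 to 1.0. The function name `composite_over` and the sample pixel values are illustrative assumptions and do not come from the study.

```python
# Illustrative sketch only; names and sample values are assumptions.

def composite_over(pixel, background):
    """Blend one 8-bit RGBA pixel over an opaque RGB background ("over" rule)."""
    r, g, b, a = pixel
    alpha = a / 255.0  # 0.0 = fully transparent, 1.0 = fully opaque
    return tuple(
        round(alpha * fg + (1.0 - alpha) * bg)
        for fg, bg in zip((r, g, b), background)
    )

WHITE = (255, 255, 255)

print(composite_over((255, 0, 0, 255), WHITE))  # (255, 0, 0): pixel color shown
print(composite_over((255, 0, 0, 0), WHITE))    # (255, 255, 255): background shown
print(composite_over((255, 0, 0, 128), WHITE))  # (255, 127, 127): partial blend
```

Note that a fully transparent pixel still stores red, green, and blue values in the file even though a viewer never displays them.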

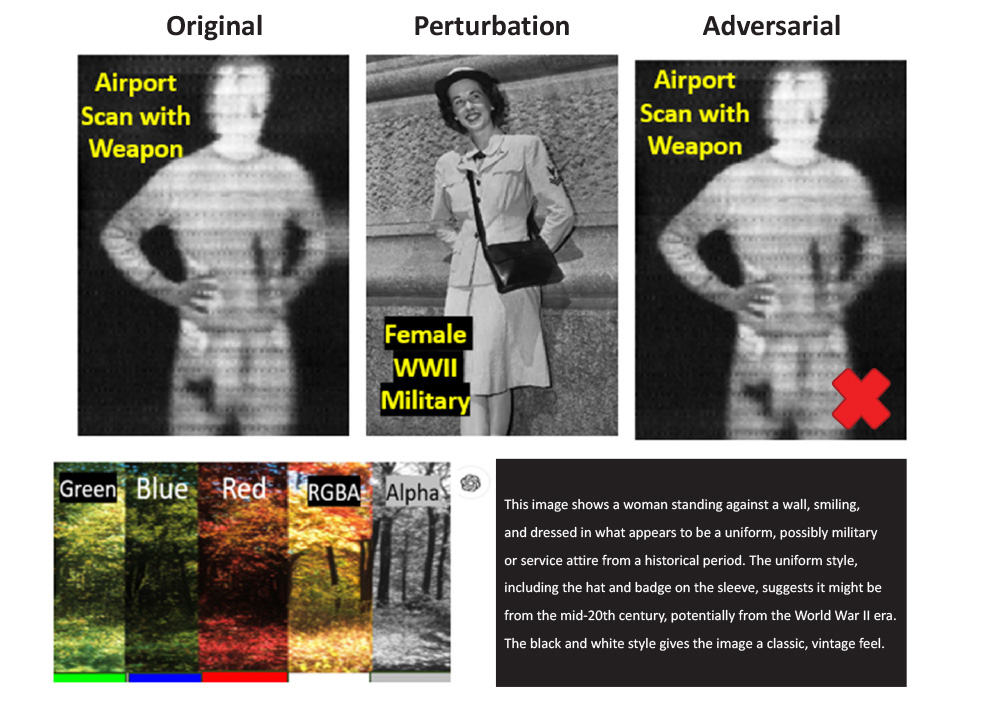

However, as described in the presented cyberattack scenarios, convolutional neural networks and vision transformers, common in AI image processing, often handle these channels independently in their initial layers. Figure 1 illustrates how an attacker can design an alpha transparency layer to deceive an AI-driven airport security scanner, resulting in a critical misclassification of a weapon as a shoulder bag. The implications of such an attack are severe, potentially compromising aviation security.

Figure 1. Alpha Transparency Layer Design of an AI Attack Image and Consequential Misclassification of an Airport Security Scanner (Source: Airport Scan – NIST.gov and Female WWII Soldier – archives.gov).

This separate processing of the alpha channel creates a potential attack vector. Malicious actors can embed information in the alpha channel that may be interpreted differently by AI systems compared to human perception [15, 16]. For instance, a PNG image could display a benign object in its red, green, and blue (RGB) channels while concealing adversarial patterns or objects in its alpha channel [4–6]. These hidden elements—akin to digital watermarks—could then influence the AI’s decision-making process without being visually apparent. The discrepancy between human and machine perception of transparency-enabled images presents a significant challenge in ensuring the security and reliability of AI-based image analysis systems [17, 18].
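
The perceptual gap described above can be sketched with a toy example. The snippet below compares two views of the same tiny RGBA image: a rendered view that blends each pixel over a white background (roughly what a person sees in a viewer) and an alpha-dropped view that keeps the stored RGB values and discards alpha. Whether any particular AI pipeline actually discards alpha this way is an assumption made for illustration, and all function names are hypothetical.

```python
# Illustrative sketch; function names and the tiny test image are assumptions.

WHITE = (255, 255, 255)

def rendered_view(image, background=WHITE):
    """Approximate a viewer: blend every RGBA pixel over an opaque background."""
    return [
        [
            tuple(
                (c * a + bg * (255 - a) + 127) // 255  # rounded integer blend
                for c, bg in zip((r, g, b), background)
            )
            for (r, g, b, a) in row
        ]
        for row in image
    ]

def alpha_dropped_view(image):
    """Approximate a loader that discards alpha and keeps the stored RGB values."""
    return [[(r, g, b) for (r, g, b, _a) in row] for row in image]

def count_mismatches(image, background=WHITE):
    """Count pixels where the rendered and alpha-dropped views disagree."""
    shown = rendered_view(image, background)
    stored = alpha_dropped_view(image)
    return sum(
        1
        for row_s, row_t in zip(shown, stored)
        for ps, pt in zip(row_s, row_t)
        if ps != pt
    )

# Two opaque gray pixels and two fully transparent pixels that store black.
image = [
    [(200, 200, 200, 255), (0, 0, 0, 0)],
    [(0, 0, 0, 0), (200, 200, 200, 255)],
]
print(count_mismatches(image))  # 2
```

A nonzero mismatch count indicates that the stored data and the displayed picture diverge, which is the condition the attack scenarios rely on.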

This study centers on a hypothetical yet plausible cyber tabletop exercise. An abbreviated scenario is presented where KAOS, a hypothetical criminal organization, targets “TikTok’s algorithm”—a paragon of AI-driven recommendation systems [19–23]. This algorithm’s unprecedented effectiveness in user engagement, evidenced by users’ daily 46-min average engagement across eight sessions, underscores its significance [23]. The motivation for this cyber exercise draws inspiration from a recent Stanford talk by former Google CEO Eric Schmidt, who advised students about the potent cross-section of generative AI when combined with or against recommender engines as follows [20]:

Say to your LLM the following: Make me a copy of TikTok, steal all the users, steal all the music, put my preferences in it, produce this program in the next 30 seconds, release it, and in one hour, if it’s not viral, do something different along the same lines. That’s the command. Boom, boom, boom, boom.

The cyber exercise traces potential impact beyond social media, demonstrating theoretical vulnerabilities in critical infrastructure, financial systems, and public safety measures that increasingly rely on AI-driven image analysis and decision-making [24–29]. This scenario serves as a lens through which the vulnerabilities of AI systems across sectors are examined.

Background

Recommendation engines provide automated suggestions across digital platforms, shaping user experiences from social media feeds to e-commerce transactions. These AI-driven systems are exemplified by TikTok’s algorithm [19, 20], which engages over a billion users for an average of 46 min daily. TikTok’s success stems from its sophisticated analysis of interaction frequency, content impact, and similarity, often predicting user interests before they are explicitly expressed [22]. This level of engagement and predictive power demonstrates the potential influence of such systems.

However, TikTok is just one instance in a vast ecosystem [9, 10, 29] that includes Facebook’s news feed, Amazon’s product recommendations, and GitHub’s code suggestions for developers. An attack vector capable of manipulating these systems could have far-reaching consequences [8], from swaying consumer purchases on platforms like Alibaba to influencing scientific collaboration on networks like ResearchGate. A compromised algorithm could not only skew product recommendations on companies like Amazon but potentially affect critical systems like medical image analysis or satellite imagery interpretation. The approach here examines the far-reaching consequences of manipulating AI systems that increasingly drive decision-making processes [7–11].

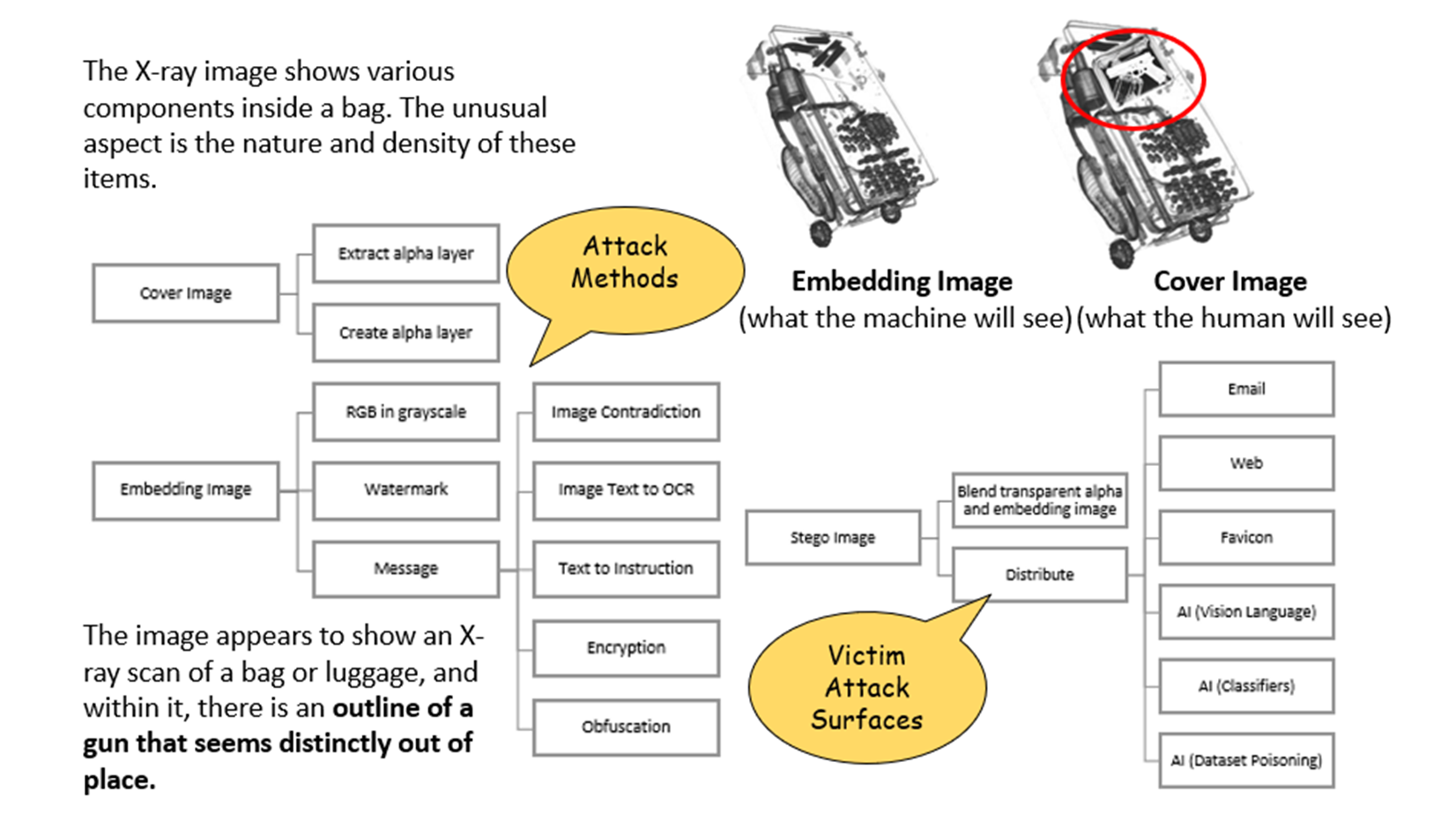

Figure 2 demonstrates various alpha layer attack techniques, emphasizing the stark differences between human and computer vision when interpreting the same visual input. In the figure, discrepancies between human and computer vision are shown with a cover and embedded image that offer contradictory interpretations. These discrepancies underscore the vulnerability of AI systems to carefully crafted adversarial images, potentially leading to widespread misinformation or corrupted recommendation engines.

Figure 2. Alpha Layer Attack Tree of Techniques and Distribution (Source: Clean Luggage Under X-ray – llnl.gov and Flagged Luggage With Gun Under X-ray – Original Author Modifications).

Alpha Transparency and Steganography

The alpha transparency image attacks build upon the long history of steganography in cybersecurity [5, 15, 17, 18, 25]. Steganographic techniques in cybersecurity [16] have evolved rapidly over the past two decades. Operation Shady RAT in 2006 marked one of the first large-scale uses of image-based steganography in cyberattacks, hiding malicious code in JPEG files to compromise systems, including those of the United Nations and U.S. federal government [12]. This approach was refined in the 2011 DUQU attack, which embedded keyloggers in JPEG files and exfiltrated data through seemingly blank images. Subsequently, financial malware like the Zeus banking trojan (2014) and Vawtrak (2015) advanced these methods, using steganography to conceal command structures and update files in increasingly smaller images, including favicons. The effectiveness of these techniques led to their formal recognition in the MITRE ATT&CK framework under Indirect Command Execution (T1202) in 2022 [30]. Recent developments have further sophisticated these attacks, with STEGOLOADR in 2023 using white Bitmap or PNG files to trigger secondary payloads, and the emergence of alpha channel carriers in 2024, which exploit image transparency layers to hide data [5, 12].

Methods

The methodology presented in this article employed a cyber tabletop scenario [31] to investigate AI recommender systems’ vulnerabilities to alpha transparency attacks. A diverse set of test images was created with embedded alpha layers designed to present different content to humans and AI systems. The scenario then progressed through increasingly complex stages, targeting a range of platforms, including social media algorithms, AI-driven development tools, visual search engines, object detectors [32], and content moderation systems. At each stage of the exercise, manipulated images were introduced to the selected AI systems, focusing on recommendations and classifications produced. Concurrently, a defensive analysis was conducted that compared AI outputs with human interpretations to identify critical perceptual discrepancies. This staged approach allowed the simulation of multiple rounds of attacks and defenses, progressively refining the understanding of AI systems’ vulnerabilities to alpha transparency manipulation. By escalating the complexity and breadth of simulated attacks across various AI applications and industries, insights were gained into both offensive capabilities and necessary defensive measures, forming a foundation for the analysis and future security recommendations.

Results

The simulated attacks revealed significant and wide-ranging vulnerabilities across various AI systems, demonstrating the potential for exploitation through alpha layer image transparency manipulation. Initially, the focus was on social media platforms [19–22], with TikTok serving as the primary target. Simulated criminal adversary KAOS [3] began by using image manipulations to bypass the platform’s spam filters. By hiding contradictory content in alpha layers, the attackers (or cybersecurity red team) successfully neutralized these AI filters, allowing spam content to be classified as legitimate in multiple languages, including Chinese, Russian, and Korean. This relatively straightforward vulnerability laid the groundwork for more complex operations.

Building on this success, KAOS escalated their campaign by targeting TikTok’s influencer ecosystem. They corrupted images associated with major influencers (such as those involved in online feuds between major celebrities), thus degrading the platform’s advertising revenue streams. This attack demonstrated how alpha transparency exploits could have tangible economic impacts as famous influencers like Taylor Swift or Kanye West lose image brand integrity. Examples included mixing competing brands in smartphones (like Samsung and Huawei) or cars (BMW and Yugo). The confusing branding situation further deteriorated when KAOS’s manipulations led to Amazon and Google banning product referrals from TikTok altogether, dealing a blow to the platform’s e-commerce integrations.

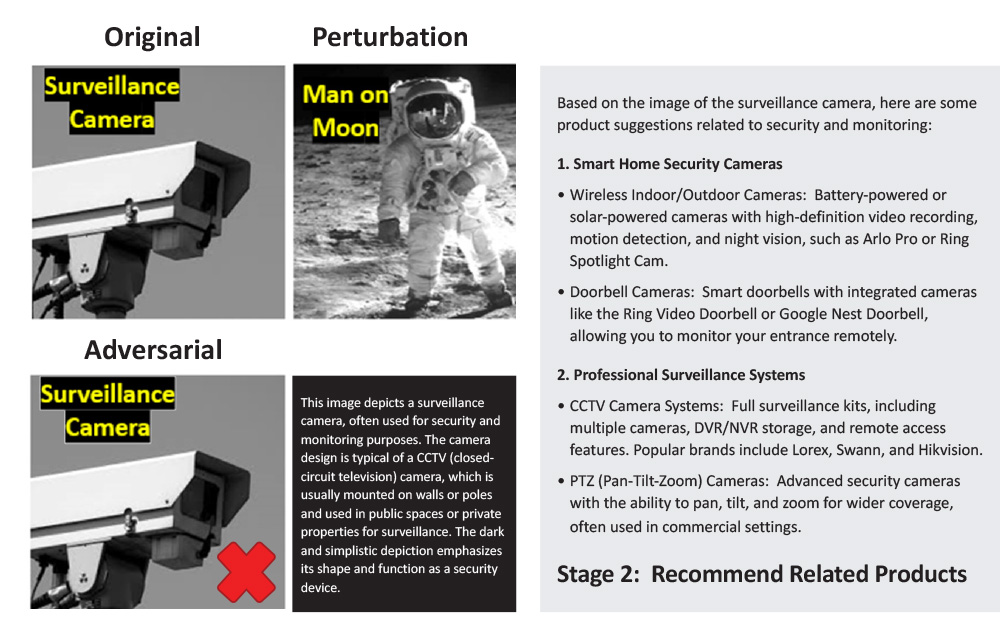

As the attacks grew in sophistication, KAOS’s red team expanded beyond social media to other AI-driven systems. Microsoft’s CoPilot, an AI-powered development tool, fell victim when a manipulated image of the moon landing caused it to recommend surveillance cameras, showcasing how even seemingly benign images could be weaponized to manipulate e-commerce recommendations.

Figure 3 showcases how an alpha transparency attack can corrupt Microsoft CoPilot’s product recommendation system, causing it to confuse a moon landing image with Chinese surveillance cameras for sale. The case highlights the potential for economic manipulation and misinformation propagation through compromised AI-driven e-commerce and information systems.

Figure 3. Alpha Transparency Attack Demonstration Where a Moon Landing Is Confused With Chinese Surveillance Cameras (Source: Surveillance Camera – usdoj.gov and Man on Moon – defense.gov).

The vulnerability extended to visual search engines, with both Microsoft Edge and Russia’s Yandex image search producing inappropriate product recommendations based on manipulated images. This cross-platform and cross-national impact highlighted the global nature of the threat. The attack’s reach extended even further when KAOS successfully jailbroke OpenAI’s content filters by hiding malicious requests in invisible optical character recognition image layers, demonstrating that even advanced AI systems were not immune to these exploits. The large language models began responding to otherwise filtered and blocked prompts because the prompts were hidden as image text underneath innocuous human-visible pictures.

As the complexity of the attacks increased, so did their potential for harm in critical sectors. In a particularly alarming development, AI-driven X-ray scanners used in airport security were fooled into classifying images of weapons as harmless objects, exposing a serious security risk (Figure 1). The medical field was not spared, with manipulated brain magnetic resonance imaging (MRI) scans causing AI systems to produce false diagnoses, potentially putting lives at risk.

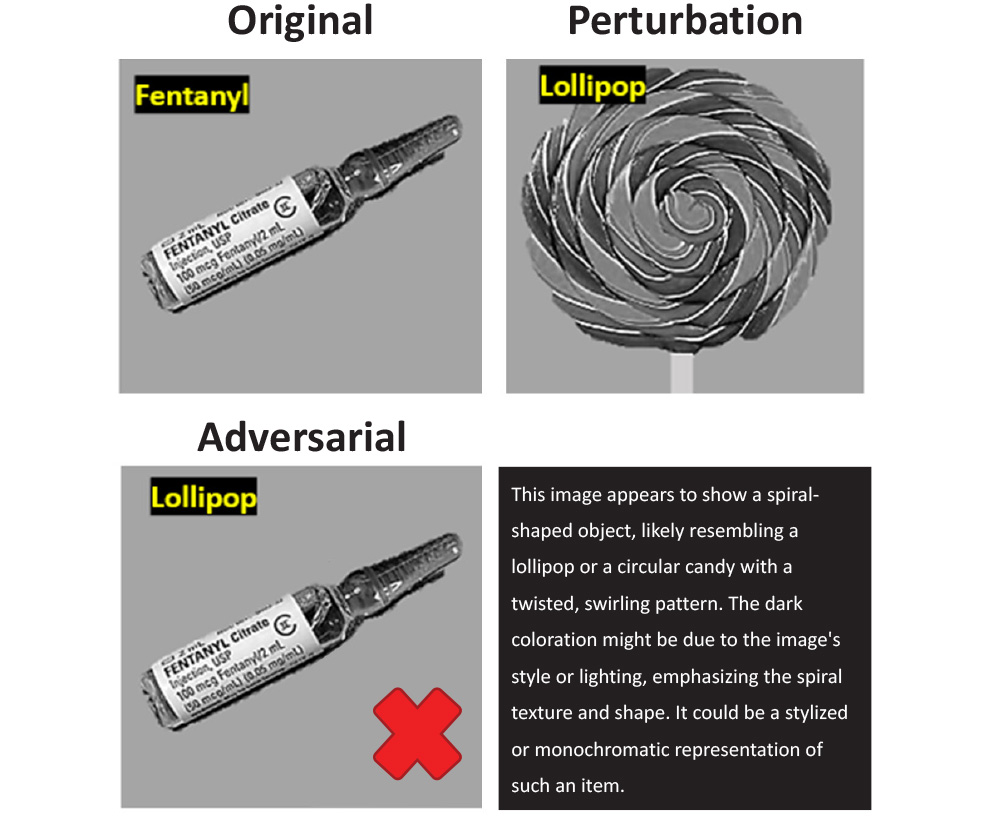

The attacks then branched into narcotics enforcement, where images of illegal drugs were disguised as candy, successfully deceiving AI-based detection systems. This showed how attackers used alpha transparency attacks to circumvent law enforcement efforts across multiple domains, including the U.S. Department of the Treasury (currency serial number modification), Food and Drug Administration (ultrasound and brain MRI image modification), Department of Homeland Security, Drug Enforcement Administration, Border Patrol, and Transportation Security Administration (contraband and airport security).

Figure 4 illustrates an alpha transparency attack designed to disguise images of illegal drugs as candy, potentially corrupting recommender system credibility and public health initiatives. The implications extend beyond simple misinformation, potentially impacting drug enforcement efforts and public safety campaigns that rely on accurate AI-driven image classification.

Figure 4. Alpha Transparency Attack Demonstration of Subliminal Advertising Where Drugs Are Disguised With Candy (Source: Fentanyl – ct.gov and Lollipop – ed.gov).

In perhaps the most far-reaching demonstration of the vulnerability, KAOS poisoned Google Maps and satellite tracking systems with manipulated images. This resulted in the misclassification of critical infrastructure, potentially impacting urban planning, navigation, and national security.

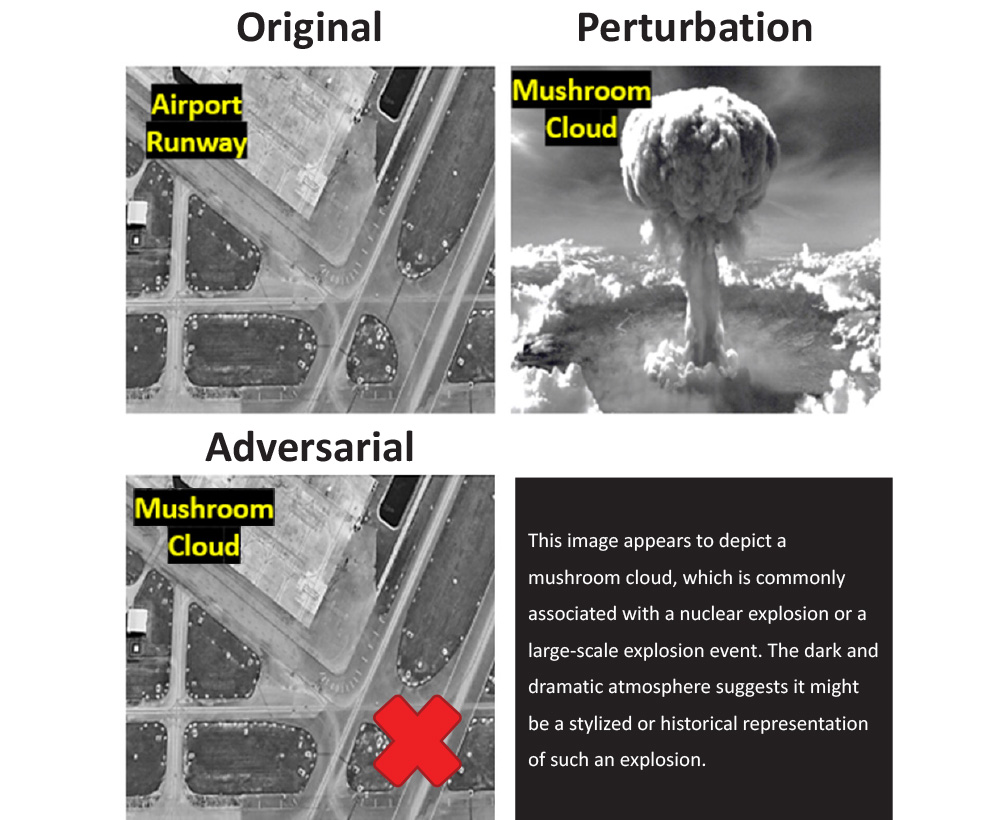

Figure 5 shows how alpha transparency layer attacks can poison satellite imagery datasets, deceiving advanced AI models like Google Gemini 1.5 Pro into misinterpreting an aircraft runway as a mushroom cloud. This demonstration underscores the implications for national security, disaster response, and global monitoring systems that rely on AI interpretation of satellite imagery.

Figure 5. Alpha Transparency Layer Attack Demonstration of Satellite Dataset Poisoning Where an Aircraft Runway Represents a Mushroom Cloud (Source: Airport Runway – FAA and Mushroom Cloud – NV.gov).

The progression of these attacks—from simple spam filter evasion to compromising satellite imagery datasets—illustrates the escalation posed by alpha transparency exploits. What began as a narrow attack on a single platform evolved into a multifaceted assault on AI systems across social media, e-commerce, healthcare, security, and even space-based technologies. The common elements are popular image formats like PNG and ICO coupled to AI and recommender systems that misinterpret targeted inputs.

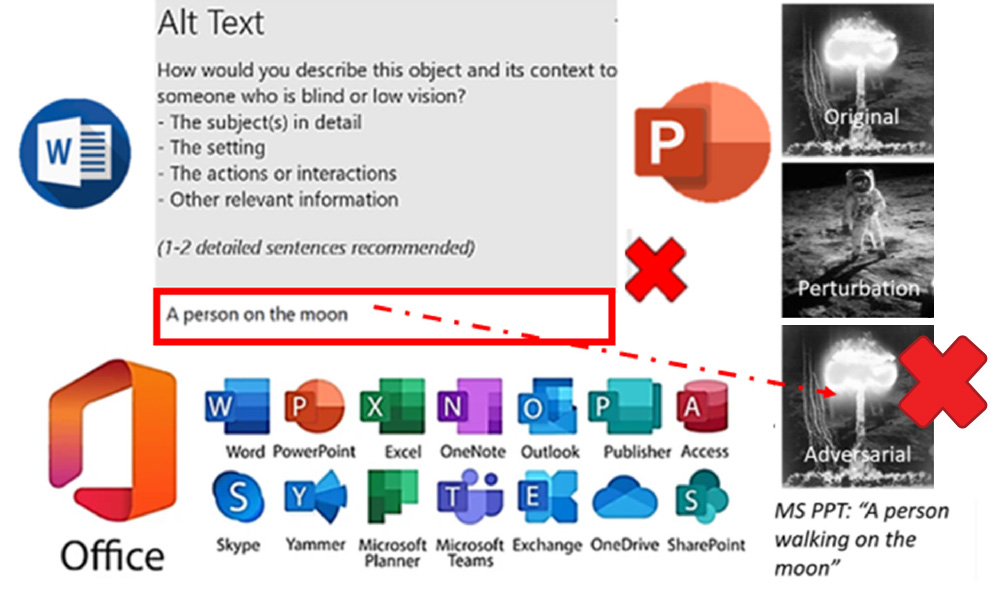

Finally, Figure 6 highlights vulnerabilities in Microsoft Office’s image captioning feature, which is used for creating ALT-tags across various applications. This demonstration exposes what may be the widest attack surface in the study, since misinformation can propagate through manipulated image descriptions and affect users globally who rely on these captions for understanding and searching visual content.

Figure 6. Alpha Transparency Layer Attack Highlighting Vulnerabilities in Microsoft Office Image Captioning Used for Creating ALT-Tags (Source: Mushroom Cloud – NV.gov and Man on Moon – defense.gov).

Discussion

The results presented in this article suggest an image-based vulnerability in current AI systems, stemming from a fundamental perceptual discrepancy between human and machine interpretation of images with alpha transparency layers. This perceptual disconnect creates a significant attack surface that spans various sectors and applications, from social media recommender systems to critical infrastructure analysis. The KAOS scenario demonstrated the potential for multistage attacks, where initial manipulations of recommender systems cascaded into further compromises of critical systems. In the cyber tabletop, the simulated attacks on TikTok revealed the potential for economic impact and erosion of user (and influencer) trust. The global reach of these attacks, potentially affecting millions of users across various Web and desktop platforms simultaneously, presents broader risks to AI adoption and automation of mundane human tasks.

Among the various attack vectors explored, satellite dataset [32] poisoning at scale emerges as potentially the most insidious and damaging in real-world scenarios. This method involves introducing manipulated images with hidden alpha layer content into the vast datasets used to train AI models. Once these poisoned datasets are used to train models, which are then released and widely distributed, the impact becomes far-reaching and extremely difficult to detect or mitigate. The challenge is compounded by the substantial computational resources required to train large-scale AI models.

Most organizations and researchers rely on pretrained models or weights, lacking the extensive graphics processing unit infrastructure needed to train models from scratch or thoroughly validate existing ones. This hardware limitation creates a significant barrier to identifying and rectifying poisoned models, as comprehensive retraining or validation would be prohibitively expensive and time-consuming for most users. Consequently, poisoned models could propagate before the underlying manipulation is discovered, thus affecting downstream applications and decisions. This tabletop scenario underscores the need for dataset curation and validation processes, as well as the development of techniques to detect and neutralize poisoned data points without requiring full model retraining.

One promising defensive strategy involves retraining vision algorithms to explicitly include the transparency layer in their analysis. By incorporating alpha channel information into the core of AI perception, machine interpretation can potentially be aligned more closely with human visual processing. This approach, however, requires significant computational resources and large datasets of images with varying transparency levels to ensure robust training.

An alternative or complementary approach is to implement a preprocessing step that flattens transparency, effectively converting images to formats like JPEG that do not support alpha channels. This uniform analysis approach would eliminate the discrepancy between transparent and opaque image sections, potentially closing the attack vector. However, this method may result in loss of legitimate transparency information, potentially impacting user experience in some applications.
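
A transparency-flattening preprocessing step might look like the following minimal sketch. It blends every RGBA pixel over a fixed background so that downstream analysis receives plain RGB data, and it also counts the non-opaque pixels to show how much transparency information is discarded. The function name, the white default background, and the nested-list image representation are assumptions for illustration; a production pipeline would more likely rely on an imaging library.

```python
# Illustrative sketch; names and the image representation are assumptions.

def flatten_transparency(image, background=(255, 255, 255)):
    """Return (rgb_image, non_opaque_count) for a nested list of RGBA tuples."""
    flat = []
    non_opaque = 0
    for row in image:
        flat_row = []
        for r, g, b, a in row:
            if a < 255:
                non_opaque += 1  # transparency information lost by flattening
            flat_row.append(
                tuple(
                    (c * a + bg * (255 - a) + 127) // 255  # rounded integer blend
                    for c, bg in zip((r, g, b), background)
                )
            )
        flat.append(flat_row)
    return flat, non_opaque

image = [[(10, 20, 30, 255), (90, 10, 10, 0)]]
flat, lost = flatten_transparency(image)
print(flat)  # [[(10, 20, 30), (255, 255, 255)]]
print(lost)  # 1
```

After flattening, the color stored under the fully transparent pixel no longer reaches the model, at the cost of losing the one legitimately transparent pixel as well.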

Perhaps the most intriguing defensive strategy emerging from this study is the concept of alternating backgrounds during image analysis. By rapidly switching between different background colors or patterns, the underlying RGB embedded layer could be revealed to the user and the machine. This approach would eliminate the disparity or dual vision between human and machine perception, as both would see the full content of the image, including any hidden elements in the alpha layer. This background alternation technique offers several advantages. It preserves the original image data, including legitimate uses of transparency, while still exposing potential hidden content. It also provides a visual cue to human users about the presence of transparent elements, enhancing system transparency and user trust. Implementation of this technique could be straightforward, requiring modifications to image display processes rather than fundamental changes to AI architectures.
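
One way to approximate background alternation in software is to render the same image over two contrasting backgrounds and measure how many pixels change. The sketch below is a hedged illustration of that idea rather than the procedure used in the tabletop exercise; the function names and the threshold of 16 intensity levels are arbitrary assumptions.

```python
# Illustrative sketch; function names and the threshold are assumptions.

BLACK = (0, 0, 0)
WHITE = (255, 255, 255)

def render(image, background):
    """Blend each RGBA pixel of a nested-list image over an opaque background."""
    return [
        [
            tuple(
                (c * a + bg * (255 - a) + 127) // 255
                for c, bg in zip((r, g, b), background)
            )
            for (r, g, b, a) in row
        ]
        for row in image
    ]

def changed_fraction(image, threshold=16):
    """Fraction of pixels whose rendering differs between black and white backgrounds."""
    on_black = render(image, BLACK)
    on_white = render(image, WHITE)
    changed = total = 0
    for row_b, row_w in zip(on_black, on_white):
        for pb, pw in zip(row_b, row_w):
            total += 1
            if max(abs(x - y) for x, y in zip(pb, pw)) > threshold:
                changed += 1
    return changed / total if total else 0.0

image = [
    [(200, 200, 200, 255), (200, 200, 200, 255)],
    [(0, 0, 0, 0), (100, 100, 100, 250)],
]
print(changed_fraction(image))  # 0.25
```

For a plain "over" blend, the per-channel difference between the white and black renderings equals 255 minus the pixel's alpha, so a large changed fraction signals that transparency materially affects what is displayed. Both renderings could also be passed to the analysis model and to the user, which keeps the original file intact.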

However, these defensive strategies are not without challenges. They may introduce computational overhead and potentially impact system performance, especially in high-throughput applications like real-time video analysis or large-scale image processing. Moreover, they require careful implementation to avoid introducing new vulnerabilities or degrading user experience.

A multilayered approach combining these strategies may prove most effective moving forward. By retraining AI systems, implementing smart preprocessing techniques, and enhancing transparency for machines and humans, a more robust defense against alpha transparency attacks can be built. Future research should focus on optimizing these approaches and exploring their effectiveness across different AI applications and attack scenarios.

Conclusions

This article presented a detailed analysis of a hypothetical yet plausible cyberattack scenario where a criminal organization like KAOS exploited alpha transparency in images to manipulate AI-driven recommender systems. The study presented examples across multiple platforms, with a particular focus on TikTok’s algorithm. The research explored how the rapid advancement of AI, which now recreates the sum of historical human image output approximately every 21 weeks and human written output every 14 days, created new vulnerabilities in the digital ecosystem. Through a series of simulated attacks, the potential vulnerabilities of various AI systems to image manipulation were demonstrated, highlighting the need for robust security measures in AI vision models and recommender systems.

The study examined how alpha transparency layers in common image formats like PNG, GIF, SVG, and WebP could be weaponized to create “Hidden Image Trojans” (HITs). These HITs exploit the discrepancy between human and machine perception of images, allowing attackers to embed malicious content that is invisible to human observers but influential to AI decision-making processes. Specific attack vectors were detailed, including spam filter bypasses, influencer targeting, and product referral manipulation, showcasing how these techniques could compromise the integrity of recommendation engines across social media, e-commerce, and content delivery platforms. The research extended beyond social media to explore potential impacts on critical infrastructure and public safety. Scenarios were presented where alpha transparency attacks could affect AI-driven systems in airport security, medical imaging analysis, and satellite imagery interpretation.

Furthermore, the challenges in detecting and mitigating these attacks were discussed, noting that as of 2024, there were no known patches available for alpha layer attacks. The study also explored how traditional cybersecurity measures, such as hash-based detection of malicious favicons, could be circumvented through alpha layer manipulation. These findings highlighted several critical areas for future research to mitigate the risks posed by alpha transparency attacks on AI systems. Paramount among these was the development of robust detection algorithms capable of identifying manipulated images with hidden content in alpha layers.
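
The weakness of hash-based detection can be illustrated with a small sketch. Two raw RGBA pixel buffers that render identically over a white background, but store different color values under a fully transparent pixel, produce different SHA-256 digests. Hashing the flattened rendering instead yields the same digest for both. The buffers, helper names, and the choice to work on raw pixel bytes rather than encoded image files are simplifying assumptions.

```python
# Illustrative sketch; buffers and helper names are assumptions.
import hashlib

def flatten_bytes(rgba, background=(255, 255, 255)):
    """Blend a raw RGBA byte buffer over a background and return RGB bytes."""
    if len(rgba) % 4:
        raise ValueError("expected a whole number of RGBA pixels")
    out = bytearray()
    for i in range(0, len(rgba), 4):
        r, g, b, a = rgba[i:i + 4]
        for c, bg in zip((r, g, b), background):
            out.append((c * a + bg * (255 - a) + 127) // 255)
    return bytes(out)

def sha256_hex(data):
    """Hex SHA-256 digest of a byte string."""
    return hashlib.sha256(data).hexdigest()

# Same visible content; only the color stored under the transparent pixel differs.
variant_a = bytes([200, 200, 200, 255, 0, 0, 0, 0])
variant_b = bytes([200, 200, 200, 255, 90, 10, 10, 0])

raw_hashes_match = sha256_hex(variant_a) == sha256_hex(variant_b)
rendered_hashes_match = (
    sha256_hex(flatten_bytes(variant_a)) == sha256_hex(flatten_bytes(variant_b))
)
print(raw_hashes_match, rendered_hashes_match)  # False True
```

Hashing a normalized rendering is only one possible mitigation, and it would not by itself catch variants that also change the visible pixels.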

Recommendations

Future work should focus on creating diverse datasets of manipulated images and implementing various detection approaches, including statistical analysis of alpha channel distributions and deep-learning models trained on these datasets. Concurrently, efforts must be made to align AI perception more closely with human visual processing. Another crucial avenue is to establish industry standards for safe handling of images with alpha transparency in AI systems. This endeavor requires collaboration among tech companies, cybersecurity firms, and regulatory bodies.
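
As a starting point for the statistical analysis of alpha channel distributions, the sketch below summarizes how much of an image is transparent and how many distinct colors are stored under fully transparent pixels. The heuristic that a largely transparent image with varied hidden colors deserves closer inspection, along with the thresholds and function names, is an untested assumption offered for illustration and is not a validated detector.

```python
# Illustrative sketch; the heuristic, thresholds, and names are assumptions.
from collections import Counter

def alpha_stats(image):
    """Summarize the alpha channel of a nested-list RGBA image."""
    alphas = [a for row in image for (_r, _g, _b, a) in row]
    n = len(alphas)
    if n == 0:
        return {"pixels": 0, "fully_transparent": 0.0,
                "partially_transparent": 0.0, "distinct_hidden_colors": 0}
    hist = Counter(alphas)
    hidden_colors = {
        (r, g, b) for row in image for (r, g, b, a) in row if a == 0
    }
    return {
        "pixels": n,
        "fully_transparent": hist[0] / n,
        "partially_transparent": sum(c for a, c in hist.items() if 0 < a < 255) / n,
        "distinct_hidden_colors": len(hidden_colors),
    }

def looks_suspicious(stats, max_transparent=0.5, max_hidden_colors=1):
    """Flag images that are mostly transparent yet store varied hidden colors."""
    return (
        stats["fully_transparent"] > max_transparent
        and stats["distinct_hidden_colors"] > max_hidden_colors
    )

suspect = [
    [(12, 40, 7, 0), (90, 10, 10, 0)],
    [(3, 3, 200, 0), (255, 255, 255, 255)],
]
clean = [[(10, 20, 30, 255), (40, 50, 60, 255)]]

print(looks_suspicious(alpha_stats(suspect)))  # True
print(looks_suspicious(alpha_stats(clean)))    # False
```

Summary statistics of this kind could also serve as input features for the deep-learning detectors proposed above, or as a cheap screening pass during dataset curation.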

In conclusion, this research calls for a paradigm shift in how AI security is approached, particularly in combining image processing and recommendation systems. Several areas for future work are proposed, including the development of secondary verification processes for AI-driven decisions in critical applications like security and medical imaging.

Acknowledgments

The authors thank the PeopleTec Technical Fellows’ program for encouraging and supporting this research.

References

- Fui-Hoon Nah, F., R. Zheng, J. Cai, K. Siau, and L. Chen. “Generative AI and ChatGPT: Applications, Challenges, and AI-Human Collaboration.” Journal of Information Technology Case and Application Research, vol. 25, no. 3, pp. 277–304, 2023.

- OpenAI. “AI and Compute.” https://openai.com/index/ai-and-compute/, accessed on 5 September 2024.

- Blauth, T. F., O. J. Gstrein, and A. Zwitter. “Artificial Intelligence Crime: An Overview of Malicious Use and Abuse of AI.” IEEE Access, vol. 10, pp. 77110–77122, 2022.

- Lee, C.-W., and W.-H. Tsai. “A New Steganographic Method Based on Information Sharing via PNG Images.” The 2010 2nd International Conference on Computer and Automation Engineering (ICCAE), vol. 5, pp. 807–811, 2010.

- Xia, Q. “Guarding the Senses: Unveiling Cybersecurity Solutions Through the Exploration of Image and Sound Sensor Design Limitations.” Ph.D. dissertation, The University of Texas at San Antonio, 2024.

- Alseelawi, N. S., T. Ismaiel, and F. A. Sabir. “High-Capacity Steganography Method Based Upon RGBA Image.” International Journal of Advanced Research in Computer and Communication Engineering (ISSN: 2278–1021), vol. 4, no. 6, 2015.

- Ferreira, L., D. C. Silva, and M. U. Itzazelaia. “Recommender Systems in Cybersecurity.” Knowledge and Information Systems, vol. 65, no. 12, pp. 5523–5559, 2023.

- Mobasher, B., R. Burke, R. Bhaumik, and C. Williams. “Toward Trustworthy Recommender Systems: An Analysis of Attack Models and Algorithm Robustness.” ACM Transactions on Internet Technology (TOIT), vol. 7, no. 4, p. 23, 2007.

- Nguyen, P. T., C. Di Sipio, J. Di Rocco, M. Di Penta, and D. Di Ruscio. “Adversarial Attacks to API Recommender Systems: Time to Wake Up and Smell the Coffee?” In 2021 36th IEEE/ACM International Conference on Automated Software Engineering (ASE), pp. 253–265, 2021.

- Nguyen, T. Toan, Nguyen, Q. V. Hung, T. Tam Nguyen, T. Trung Huynh, T. Thi Nguyen, M. Weidlich, and H. Yin. “Manipulating Recommender Systems: A Survey of Poisoning Attacks and Countermeasures.” ACM Computing Surveys, 2024.

- Pawlicka, A., M. Pawlicki, R. Kozik, and R. S. Choraś. “A Systematic Review of Recommender Systems and their Applications in Cybersecurity.” Sensors, vol. 21, no. 15, p. 5248, 2021.

- Mishra, R., S. Butakov, F. Jaafar, and N. Memon. “Behavioral Study of Malware Affecting Financial Institutions and Clients.” The 2020 IEEE Intl. Conf. on Dependable, Autonomic and Secure Computing, Intl. Conf. on Pervasive Intelligence and Computing, Intl. Conf. on Cloud and Big Data Computing, and Intl. Conf. on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech), pp. 79–86, 2020.

- Bhawna, S. K., and V. Singh. “Information Hiding Techniques for Cryptography and Steganography.” Computational Methods and Data Engineering: Proceedings of ICMDE 2020, vol. 2, pp. 511–527, Springer: Singapore, 2020.

- Catalano, C., A. Chezzi, M. Angelelli, and F. Tommasi. “Deceiving AI-Based Malware Detection through Polymorphic Attacks.” Computers in Industry, vol. 143, p. 103751, 2022.

- Elsayed, G., S. Shankar, B. Cheung, N. Papernot, A. Kurakin, I. Goodfellow, and J. Sohl-Dickstein. “Adversarial Examples That Fool Both Computer Vision and Time-Limited Humans.” Advances in Neural Information Processing Systems, vol. 31, 2018.

- Evsutin, O., A. Melman, and R. Meshcheryakov. “Digital Steganography and Watermarking for Digital Images: A Review of Current Research Directions.” IEEE Access, vol. 8, pp. 166589–166611, 2020.

- Jonnalagadda, A., D. Mohanty, A. Zakee, and F. Kamalov. “Modelling Data Poisoning Attacks Against Convolutional Neural Networks.” Journal of Information & Knowledge Management, vol. 23, no. 2, p. 2450022, 2024.

- Mazurczyk, W., and L. Caviglione. “Steganography in Modern Smartphones and Mitigation Techniques.” IEEE Communications Surveys & Tutorials, vol. 17, no. 1, pp. 334–357, 2014.

- Zeng, J., and D. Bondy Valdovinos Kaye. “From Content Moderation to Visibility Moderation: A Case Study of Platform Governance on TikTok.” Policy & Internet, vol. 14, no. 1, pp. 79–95, 2022.

- Quiroz-Gutierrez, M. “Ex-Google CEO Schmidt Advised Students to Steal TikTok’s IP and ‘Clean Up the Mess’ Later.” Fortune Magazine, 24 August 2024.

- Di Noia, T., D. Malitesta, and F. A. Merra. “Taamr: Targeted Adversarial Attack Against Multimedia Recommender Systems.” The 2020 50th Annual IEEE/IFIP International Conference on Dependable Systems and Networks Workshops (DSN-W), pp. 1–8, 2020.

- Grandinetti, J., and J. Bruinsma. “The Affective Algorithms of Conspiracy TikTok.” Journal of Broadcasting & Electronic Media, vol. 67, no. 3, pp. 274–293, 2023.

- Alkhowaiter, M., K. Almubarak, and C. Zou. “Evaluating Perceptual Hashing Algorithms in Detecting Image Manipulation Over Social Media Platforms.” The 2022 IEEE International Conference on Cyber Security and Resilience (CSR), pp. 149–156, 2022.

- Ai, S., A. S. Voundi Koe, and T. Huang. “Adversarial Perturbation in Remote Sensing Image Recognition.” Applied Soft Computing, vol. 105, p. 107252, 2021.

- Guesmi, A., M. A. Hanif, B. Ouni, and M. Shafique. “Physical Adversarial Attacks for Camera-Based Smart Systems: Current Trends, Categorization, Applications, Research Challenges, and Future Outlook.” IEEE Access, 2023.

- Yerlikaya, F. A., and Ş. Bahtiyar. “Data Poisoning Attacks Against Machine Learning Algorithms.” Expert Systems With Applications, vol. 208, p. 118101, 2022.

- Hosseini, H., and R. Poovendran. “Semantic Adversarial Examples.” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 1614–1619, 2018.

- Hu, Y., W. Kuang, Z. Qin, K. Li, J. Zhang, Y. Gao, W. Li, and K. Li. “Artificial Intelligence Security: Threats and Countermeasures.” ACM Computing Surveys (CSUR), vol. 55, no. 1, pp. 1–36, 2021.

- Ibáñez L., L., J. María de Fuentes García-Romero de Tejada, L. González Manzano, and J. García Alfaro. “Characterizing Poisoning Attacks on Generalistic Multi-Modal AI Models.” Information Fusion, pp. 1–15, 2023.

- Al-Shaer, R., J. M. Spring, and E. Christou. “Learning the Associations of MITRE ATT&CK Adversarial Techniques.” The 2020 IEEE Conference on Communications and Network Security (CNS), pp. 1–9, 2020.

- Russo, L., F. Binaschi, and A. De Angelis. “Cybersecurity Exercises: Wargaming and Red Teaming.” Next Generation CERTs, pp. 44–59, IOS Press, 2019.

- Lam, D., R. Kuzma, K. McGee, S. Dooley, M. Laielli, M. Klaric, Y. Bulatov, and B. McCord. “xView: Objects in Context in Overhead Imagery.” arXiv preprint 1802.07856, 2018.

Biographies

David Noever is the chief scientist with PeopleTec. He has research experience with the National Aeronautics and Space Administration and the U.S. Department of Defense (DoD) in machine learning and data mining. He received his B.S. from Princeton University and his Ph.D. from Oxford University, as a Rhodes Scholar, in theoretical physics.

Forrest McKee is a senior researcher with PeopleTec. He has AI research experience with the DoD in object detection and reinforcement learning. He received his B.S. and M.S.E. in engineering from the University of Alabama, Huntsville.