Summary

With the growing integration of artificial intelligence (AI) in cybersecurity, this article investigates the economic principles of elasticity of substitution and elasticity of scale to evaluate their impact on the return on security investment. Recognizing the potential of AI technologies to substitute for human labor and traditional cybersecurity mechanisms, and the significance of the cost ramifications of scaling AI solutions within cybersecurity frameworks, the study contributes theoretically to understanding the financial and operational dynamics of AI in cybersecurity. It provides valuable insights for cybersecurity practitioners in the public and private sectors. The analysis highlights ways in which AI technologies can redefine economic outcomes in cybersecurity efforts. Strategic recommendations are also offered for practitioners to optimize the economic efficiency and effectiveness of AI in cybersecurity, emphasizing a dynamic, nuanced approach to AI investment and deployment.

Introduction

In the rapidly evolving landscape of cybersecurity, the integration of artificial intelligence (AI) technologies represents a paradigm shift, offering unprecedented opportunities for enhancing security measures against complex cyberthreats [1, 2]. This transition, driven by the increasing sophistication of cyberattacks and extremely large and diverse collections of structured and unstructured data generated in digital ecosystems, necessitates a reevaluation of traditional cybersecurity frameworks. As such, governments recognize the imperative to adapt and innovate, leveraging AI to strengthen and improve defense mechanisms [3] and ensure efficient utilization of resources in public security domains [4].

Building on this foundation, the economic principles of elasticity of substitution and elasticity of scale emerge as pivotal factors in this context, providing a lens through which the impact of AI on cybersecurity can be assessed for operational efficiency and investment return. The elasticity of substitution explores the extent to which AI technologies can replace traditional human labor and non-AI cybersecurity measures. The elasticity of scale examines the cost implications of scaling AI solutions within cybersecurity infrastructures. This scaling is especially important in public sector contexts where budget constraints are ongoing issues and maximizing resource efficiency is crucial [5].

Given the strategic importance of cybersecurity investments, understanding these elasticities’ influence on the return on security investment (ROSI) is crucial for organizations, including government entities, navigating the digital transformation. This article aims to explore the interplay between the elasticity of substitution, the elasticity of scale, and ROSI in the context of AI in cybersecurity, framing an analysis that aids in strategic decision-making for investments in cybersecurity technologies. The core research question guiding this exploration is: How do the elasticities of substitution and scale influence ROSI? Addressing this question is significant for several reasons.

Firstly, it contributes to the literature at the intersection of economic theory and cybersecurity, offering an analytical framework for understanding the financial and operational dynamics of AI integration. Secondly, by examining the economic implications of deploying AI in cybersecurity, the study provides practical insights for businesses, policymakers, and cybersecurity professionals, facilitating informed decisions that balance cost, efficiency, and security outcomes. Lastly, the investigation into ROSI underscores the financial viability of AI cybersecurity solutions, a critical concern for stakeholders in an era of tightening budgets and escalating cybersecurity risks.

This article is structured as follows. The Theoretical Foundations section introduces the concept of return on security investment along with the elasticities of substitution and scale. The Analysis section examines how these elasticities influence ROSI and derives four scenarios from their combinations. Following this, the Implications and Suggested Strategies for Practitioners section highlights the implications and proposes strategies for cybersecurity practitioners. Finally, the Conclusions section concludes the article.

Theoretical Foundations

In digital ecosystems, AI does not just automate tasks typically reserved for low-skilled labor but is also involved in domains once thought to be exclusive to high-skilled labor through its innovative capabilities [6]. For instance, in cybersecurity, AI algorithms are not only replacing routine tasks like malware detection but are also stepping into roles requiring complex decision-making, such as identifying subtle patterns of sophisticated cyberattacks or automating the response to incidents in real-time. This leap signifies a shift from AI as a tool for automation to a comprehensive strategic asset capable of driving innovation in cybersecurity measures [7]. This transformative potential of AI in cybersecurity directly ties into the study’s examination of the elasticity of substitution and scale, highlighting the economic impacts of integrating AI into security strategies.

The elasticity of substitution emerges as a critical factor when considering the replacement of human labor and non-AI cybersecurity measures with AI-driven tools. This elasticity measures the ease with which AI technologies can be substituted for traditional security methods, influenced by the technological advancement of AI, its compatibility with existing security infrastructures, regulatory compliance requirements and accountability measures, and the dynamic nature of cyberthreats. The relative costs of labor and AI technologies play a significant role in this dynamic, where advancements in AI capabilities and labor market fluctuations can shift the balance, potentially making AI solutions more economically attractive [8]. Such cost shifts can accelerate or hinder the adoption of AI in cybersecurity, reflecting on the broader implications for security effectiveness and organizational resilience.

In parallel, the elasticity of scale addresses how the expansion of AI in cybersecurity affects cost structures, focusing on the implications of scaling AI solutions for the overall economics of cybersecurity efforts. By their automated and digital nature, AI technologies present unique opportunities for economies of scale, where the marginal cost of cybersecurity operations could decrease as AI solutions are deployed more extensively. However, this optimistic view is balanced by considering possible diseconomies of scale, such as the added complexity and overhead that might accompany large-scale AI deployments, potentially eroding the cost benefits of scalability.

A pertinent real-world example that illustrates the elasticity of scale in AI-driven cybersecurity is the implementation of AI technologies to enhance software supply chain (SSC) security within the Defense Industrial Base (DIB). This approach, as detailed in the report published by the Cybersecurity & Information Systems Information Analysis Center (CSIAC) [9], leverages AI to automate threat intelligence processing and expedite cybersecurity risk management. As these AI systems scale across the defense industry’s complex infrastructure, they demonstrate economies of scale by spreading the development and operational costs over a larger network of military and government installations. For instance, AI-driven systems can provide continuous scanning and real-time threat assessment across various platforms, significantly reducing the marginal cost of enhancing security for each additional system component.

However, the scalability and cost-effectiveness of these AI-driven solutions can be tempered by diseconomies of scale as they grow. For example, as AI solutions are scaled up to protect SSCs across the DIB, which comprises various branches of the military with distinct operational environments, the complexity of ensuring compliance with the National Institute of Standards and Technology controls adds significant overhead. According to the CSIAC report [9], integrating and managing AI-driven threat assessment tools across different branches involves substantial costs related to system customization to adhere to specific security standards, training personnel to handle sophisticated AI tools, and updating systems to keep up with the latest security protocols. These complexities can lead to slower response times to emerging threats and increase the operational costs, thereby potentially diluting the initial cost benefits associated with scaling AI solutions in such a regulated and diverse environment.
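The tension between economies and diseconomies of scale described above can be made concrete with a small numerical sketch. The cost model below is purely illustrative and is not taken from the CSIAC report: it assumes a fixed development cost spread over all protected systems, a constant per-system operating cost, and a coordination overhead per system that grows linearly with the number of systems. All parameter names and values are hypothetical.

```python
def average_cost(n_systems: int, fixed: float, marginal: float, overhead: float) -> float:
    """Average cost per protected system under an ASSUMED toy cost model.

    fixed    -- one-off development cost, spread over all systems (economies of scale)
    marginal -- constant operating cost per system
    overhead -- compliance/coordination cost per system that grows with the
                number of systems (diseconomies of scale)
    """
    if n_systems < 1:
        raise ValueError("n_systems must be at least 1")
    return fixed / n_systems + marginal + overhead * n_systems


# Hypothetical figures, chosen only to show the shape of the curve.
for n in (10, 100, 1000):
    cost = average_cost(n, fixed=1_000_000.0, marginal=5_000.0, overhead=100.0)
    print(f"{n:>5} systems -> average cost per system: {cost:,.0f}")
```

Under these assumed numbers, the average cost first falls as the fixed cost is spread more widely and then rises again once the coordination overhead dominates, which is the pattern the two preceding paragraphs describe qualitatively.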

This study investigates the impact of the elasticities of substitution and scale on ROSI, a metric that is profoundly affected by the cost-effectiveness and efficiency of cybersecurity measures. By integrating the effects of substitution and scale, it explores how strategic AI integration can enhance ROSI. This metric has been extensively studied in the literature of cybersecurity economics [10, 11]. ROSI provides a quantitative measure of the financial value derived from implementing cybersecurity countermeasures [12], serving as a pivotal tool for assessing the economic viability of investments in cybersecurity technologies, including AI. One method to quantitatively calculate ROSI is as follows [13]:

$$\mathrm{ROSI} = \frac{CA - CI}{CI}, \tag{1}$$

where:

- Cost Avoided (CA) includes potential losses from cybersecurity incidents that are prevented due to AI-enhanced security measures. It can also consider saved costs and efficiency gains, such as reduced downtime or faster threat detection and response times.

- Cost of Investment (CI) encompasses the total expenditure on AI technologies, including initial purchase, implementation, training, and ongoing maintenance costs.
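As a minimal sketch of the arithmetic, the snippet below assumes the commonly used form ROSI = (CA − CI) / CI, with CA and CI as defined above. The function name and the monetary figures are hypothetical and serve only to illustrate the calculation.

```python
def rosi(cost_avoided: float, cost_of_investment: float) -> float:
    """Return on security investment, assuming ROSI = (CA - CI) / CI."""
    if cost_of_investment <= 0:
        raise ValueError("cost_of_investment must be positive")
    return (cost_avoided - cost_of_investment) / cost_of_investment


# Hypothetical figures, not drawn from any real deployment.
ca = 500_000.0  # CA: losses prevented plus efficiency gains
ci = 200_000.0  # CI: purchase, implementation, training, maintenance
print(f"ROSI = {rosi(ca, ci):.2f}")  # 1.50, i.e., a 150% return
```

A value above zero means the avoided costs exceed the investment; a negative value means the investment cost more than it saved.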

Understanding the elasticity of substitution sheds light on the potential of AI to replace existing cybersecurity measures efficiently, potentially leading to a substantial increase in CA through improved risk mitigation and response capabilities. Concurrently, examining the elasticity of scale allows for an assessment of how the costs associated with AI-driven cybersecurity solutions evolve as these solutions are deployed at larger scales, affecting the overall ROSI calculation. Through this lens, the next section explores the complex interplay between these elasticities and ROSI, highlighting the nuanced ways in which AI technologies can redefine economic outcomes in cybersecurity efforts.

Analysis

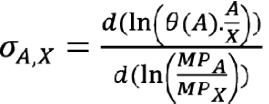

This section analyzes the theoretical impact of the elasticity of substitution and the elasticity of scale on ROSI outcomes within the framework of AI-driven cybersecurity measures. The goal is to integrate these foundational economic theories into a comprehensive understanding of how the adaptability and scalability of AI technologies influence their economic viability and effectiveness in enhancing cybersecurity defenses. The elasticity of substitution is a measure of how easily one (economic) good or input can be substituted for another in response to changes in their relative prices or productivity [14]. To fit the context and requirements of this analysis, the following widely applicable formula for the elasticity of substitution [15] is adapted:

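[Equation 2: elasticity of substitution σA,X between AI investment (A) and traditional inputs (X), incorporating θ(A) and the marginal productivity of AI (MPA).] (2)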

In this equation, A and X represent the inputs of AI investment and traditional inputs (labor and non-AI technologies), respectively. θ(A) captures the efficiency or effectiveness of AI technology as a function of AI investment (A), reflecting how advancements in AI technology improve its contribution to cybersecurity effectiveness. Technological advancements in AI (θ(A)) can alter the marginal productivity of AI (MPA), potentially increasing its substitutability with traditional inputs (X). This enhanced substitutability, driven by AI’s technological advancements, affects both the cost avoided and the cost of investment, thereby influencing ROSI.
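To make the concept tangible, the sketch below estimates an elasticity of substitution numerically, using the textbook definition σ = d ln(X/A) / d ln(MP_A/MP_X). It is not the article's adapted formula. The constant elasticity of substitution (CES) production function, its parameters (`share`, `rho`), and the treatment of θ as a constant multiplier on A are all assumptions made for illustration. For this functional form the analytical value is 1 / (1 − rho), which gives a check on the numerical result.

```python
import math


def ces_output(a_input, x_input, theta=1.0, share=0.5, rho=0.5):
    """ASSUMED CES form: Y = (s*(theta*A)^rho + (1-s)*X^rho)^(1/rho)."""
    ai_term = share * (theta * a_input) ** rho
    trad_term = (1.0 - share) * x_input ** rho
    return (ai_term + trad_term) ** (1.0 / rho)


def log_mrts(f, a_input, x_input, h=1e-6):
    """ln(MP_A / MP_X), with marginal products from central differences."""
    mp_a = (f(a_input + h, x_input) - f(a_input - h, x_input)) / (2.0 * h)
    mp_x = (f(a_input, x_input + h) - f(a_input, x_input - h)) / (2.0 * h)
    return math.log(mp_a / mp_x)


def substitution_elasticity(f, a_input, x_input, step=1e-3):
    """sigma = d ln(X/A) / d ln(MP_A/MP_X), varying X while A is held fixed."""
    x_up = x_input * math.exp(step)     # ln(X/A) increases by step
    x_down = x_input * math.exp(-step)  # ln(X/A) decreases by step
    d_log_mrts = log_mrts(f, a_input, x_up) - log_mrts(f, a_input, x_down)
    return (2.0 * step) / d_log_mrts


# rho = 0.5 implies sigma = 2 (easy substitution); rho = -1 implies sigma = 0.5.
sigma_high = substitution_elasticity(ces_output, 2.0, 3.0)
sigma_low = substitution_elasticity(lambda a, x: ces_output(a, x, rho=-1.0), 2.0, 3.0)
print(f"rho =  0.5 -> sigma is about {sigma_high:.2f}")
print(f"rho = -1.0 -> sigma is about {sigma_low:.2f}")
```

In this particular functional form, a constant θ shifts the marginal productivity of AI but leaves σ unchanged. The article's θ(A) varies with AI investment, which this simplified sketch does not model.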

The elasticity of scale examines how the total output, in this case, cybersecurity effectiveness (Y), changes in response to a proportional increase in all inputs (A and X) [16]. This analysis is crucial for understanding the scalability of AI investments in cybersecurity and their potential to yield returns to scale. Identifying the conditions under which AI investments lead to enhanced scalability of cybersecurity operations can inform strategic decisions about the pace and extent of AI integration. The elasticity of scale, modified to account for external factors (ϕ), examines how the scalability of cybersecurity effectiveness is influenced by these factors as follows:

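[Equation 3: elasticity of scale ε of cybersecurity effectiveness (Y) with respect to a proportional scaling λ of the inputs A and X, modified by Ψ(ϕ).] (3)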

This equation measures how the output (Y) changes in response to a proportional change in all inputs, scaled by a factor of λ. Moreover, it indicates that the overall returns to scale in cybersecurity effectiveness can be affected by external factors, which can either amplify or diminish the effectiveness of scaling up inputs, including AI. Ψ(ϕ) is a function that modifies the overall effectiveness of the cybersecurity system based on external factors. In this study, it is assumed that Ψ(ϕ) increases with positive external developments (e.g., effective AI regulations) and decreases with negative developments (e.g., sophisticated cyberthreats).
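The following sketch illustrates the idea numerically. It computes the textbook elasticity of scale, d ln Y(λA, λX) / d ln λ evaluated at λ = 1, for a hypothetical Cobb-Douglas effectiveness function, and then applies an external-factor multiplier. Treating Ψ(ϕ) as a simple multiplier on the base elasticity is an assumption made here for illustration, as are the function names and the exponents 0.7 and 0.5.

```python
import math


def cobb_douglas(a_input, x_input, alpha=0.7, beta=0.5):
    """ASSUMED effectiveness function: Y = A^alpha * X^beta."""
    return a_input ** alpha * x_input ** beta


def scale_elasticity(f, a_input, x_input, step=1e-4):
    """d ln Y(lam*A, lam*X) / d ln lam at lam = 1, by central differences."""
    up = math.log(f(a_input * math.exp(step), x_input * math.exp(step)))
    down = math.log(f(a_input * math.exp(-step), x_input * math.exp(-step)))
    return (up - down) / (2.0 * step)


def adjusted_scale_elasticity(f, a_input, x_input, psi):
    """ASSUMPTION: external factors enter as a multiplier psi = Psi(phi)."""
    return psi * scale_elasticity(f, a_input, x_input)


base = scale_elasticity(cobb_douglas, 4.0, 9.0)  # alpha + beta = 1.2
favorable = adjusted_scale_elasticity(cobb_douglas, 4.0, 9.0, psi=1.1)
adverse = adjusted_scale_elasticity(cobb_douglas, 4.0, 9.0, psi=0.8)
print(f"base: {base:.2f}, favorable: {favorable:.2f}, adverse: {adverse:.2f}")
```

With these hypothetical values, the base elasticity of 1.2 indicates increasing returns to scale, while the adverse multiplier of 0.8 pulls the adjusted value below 1, corresponding to the case in which negative external developments turn economies of scale into diseconomies.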

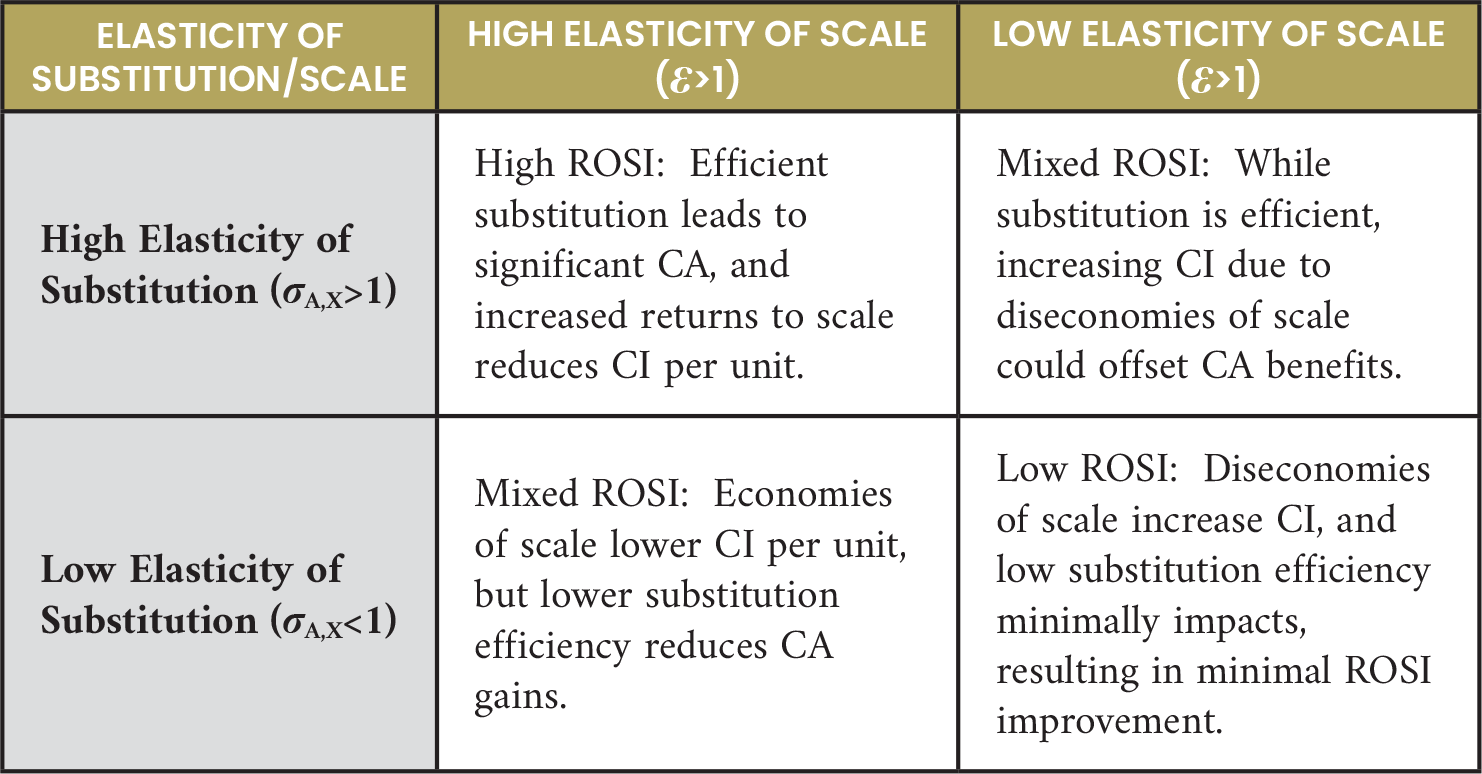

These two refined metrics, encompassing technological advancements in AI (θ(A)) and the role of external factors (ϕ), delineate four distinct scenarios that significantly influence the ROSI in the domain of AI-driven cybersecurity. Each scenario represents a unique combination of the elasticity of substitution and scale, providing a nuanced understanding of how AI’s integration into cybersecurity strategies can be optimized for economic efficiency and effectiveness, as follows:

1. High Elasticity of Substitution (σA,X>1) With High Elasticity of Scale (ε>1)

This scenario signifies an ideal state where technological advancements in AI not only enhance its substitutability with traditional cybersecurity measures but also facilitate economies of scale as AI solutions are expanded. The dual presence of a high σA,X due to significant θ(A) improvements, alongside a favorable ε influenced by positive external factors (ϕ), suggests an optimal environment for AI investments, yielding substantial improvements in ROSI. This combination reflects a scenario where AI’s deployment maximizes cost avoidance and minimizes investment costs, presenting a compelling case for aggressive AI integration in cybersecurity frameworks.

2. High Elasticity of Substitution (σA,X>1) With Low Elasticity of Scale (ε<1)

In this scenario, while AI exhibits strong substitutability due to advancements (θ(A)), scaling AI solutions encounters challenges, possibly due to negative externalities (ϕ) that diminish the returns to scale (ε<1). This juxtaposition leads to mixed outcomes for ROSI, where the benefits of substituting AI for traditional measures may be partially offset by the increased costs or diminished effectiveness associated with scaling. Strategic considerations must be employed to navigate this landscape, balancing the push for substitution with careful scaling strategies.

3. Low Elasticity of Substitution (σA,X<1) With High Elasticity of Scale (ε>1)

Here, AI’s technological advancements may not sufficiently enhance its substitutability, possibly due to limitations in AI’s applicability or integration complexities. However, positive external factors support economies of scale, suggesting that while AI may not replace traditional measures as effectively, scaling up AI deployments is economically beneficial. This scenario requires a focused approach to leveraging the scalability of AI to improve ROSI, possibly by enhancing AI capabilities or finding niches where AI’s integration delivers clear benefits.

4. Low Elasticity of Substitution (σA,X<1) With Low Elasticity of Scale (ε<1)

Representing the most challenging scenario, this combination arises when AI’s technological advancements fail to significantly increase its substitutability and external factors lead to diseconomies of scale. The convergence of these factors results in the lowest potential for ROSI improvement, indicating a need for a reevaluation of AI investment strategies. Organizations in this quadrant must critically assess their AI deployments, focusing on overcoming barriers to AI effectiveness and scalability to realize positive economic outcomes.
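The four scenarios above can be expressed as a simple lookup on the two elasticities. The sketch below is only a classification aid: the function name is hypothetical, the outcome labels paraphrase the scenario descriptions, and the boundary case of an elasticity exactly equal to 1, which the scenarios do not address, is assigned to the "low" side as an assumption.

```python
def classify_scenario(sigma: float, epsilon: float) -> str:
    """Map (sigma, epsilon) to one of the four scenarios described above.

    ASSUMPTION: a value exactly equal to 1 is treated as "low".
    """
    high_substitution = sigma > 1
    high_scale = epsilon > 1
    if high_substitution and high_scale:
        return "Scenario 1: substantial ROSI improvement expected"
    if high_substitution:
        return "Scenario 2: mixed ROSI outcome, scale with care"
    if high_scale:
        return "Scenario 3: rely on scalability, improve substitutability"
    return "Scenario 4: lowest ROSI potential, reevaluate AI strategy"


# Hypothetical elasticity estimates for four organizations.
for sigma, epsilon in [(2.0, 1.3), (2.0, 0.9), (0.5, 1.3), (0.5, 0.9)]:
    print(f"sigma={sigma}, epsilon={epsilon}: {classify_scenario(sigma, epsilon)}")
```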

Table 1 summarizes these scenarios in a matrix that captures the multifaceted impacts of AI’s elasticities of substitution and scale on ROSI in cybersecurity.

Table 1. The Interplay Between Elasticities of Substitution and Scale on Return on Cybersecurity Investment

Investigating the details of each scenario—identifying what factors make AI more scalable or substitutable to determine which scenario is more likely—is beyond the scope of this article and requires a specific, detailed analysis tailored to the distinct needs of each organization and their cybersecurity context. Nonetheless, based on this theoretical foundation, the next section draws practical implications of elasticity of substitution and scale on the economics of cybersecurity, guiding organizations in navigating the complexities of AI integration to optimize security investments and operational effectiveness.

Implications and Suggested Strategies for Practitioners

Integrating the insights from the analysis of elasticity of substitution and elasticity of scale on ROSI, the practical implications can be synthesized into a cohesive strategy for cybersecurity practitioners. This strategy revolves around optimizing the economic efficiency and effectiveness of AI-driven cybersecurity measures by understanding and acting upon the interplay between these elasticities and ROSI. Figure 1 presents a consolidated action plan that reflects these insights.

Figure 1. Consolidated Action Plan for Cybersecurity Practitioners (Source: Visual Generation, 0721-Team, and Vir Leguizamón [Canva]).

These strategies together enable cybersecurity practitioners to construct a comprehensive approach that maximizes the economic efficiency and effectiveness of AI in cybersecurity. This approach acknowledges the complexity of the interplay between the elasticity of substitution and scale, guiding practitioners in making informed decisions that optimize ROSI in an ever-evolving cybersecurity landscape.

Conclusions

This article has analyzed the intricate dynamics between the elasticities of substitution and scale of AI technologies and their consequential impact on ROSI within the realm of cybersecurity. The exploration revealed that the strategic integration of AI in cybersecurity is not merely a technological upgrade but a complex economic decision that hinges on understanding and leveraging the elastic properties of AI. The ideal scenario, characterized by high elasticity of substitution and high elasticity of scale, underscores the potential for AI to deliver substantial improvements in ROSI through cost-effective substitution and scalable deployment. However, the mixed and challenging scenarios present a call to action for organizations in the public and private sectors to navigate the intricacies of AI deployment with agility and foresight, addressing barriers to scalability and enhancing substitutability where necessary. The interrelated nature of substitution and scale elasticities demands a dynamic, nuanced approach to AI investment and deployment in cybersecurity. Organizations must adopt a continuous evaluation mindset, recalibrating strategies in response to technological evolutions and shifting threat landscapes to harness AI’s full economic and security potential. Moreover, this article calls for empirical evidence and case studies that illustrate the real-world applications and implications of these elasticities in cybersecurity strategies.

References

1. Kaur, R., D. Gabrijelčič, and T. Klobučar. “Artificial Intelligence for Cybersecurity: Literature Review and Future Research Directions.” Information Fusion, 2023.

2. Truong, T. C., I. Zelinka, J. Plucar, M. Čandík, and V. Šulc. “Artificial Intelligence and Cybersecurity: Past, Presence, and Future.” Artificial Intelligence and Evolutionary Computations in Engineering Systems, 2020.

3. Taddeo, M., T. McCutcheon, and L. Floridi. “Trusting Artificial Intelligence in Cybersecurity Is a Double-Edged Sword.” Nature Machine Intelligence, pp. 557–560, 2019.

4. Mikalef, P., K. Lemmer, C. Schaefer, M. Ylinen, S. O. Fjørtoft, H. Y. Torvatn, M. Gupta, and B. Niehaves. “Enabling AI Capabilities in Government Agencies: A Study of Determinants for European Municipalities.” Government Information Quarterly, 2022.

5. Ponemon Institute. “State of Cybersecurity in Local, State & Federal Government,” 2015.

6. Aghion, P., B. F. Jones, and C. I. Jones. Artificial Intelligence and Economic Growth. Cambridge: National Bureau of Economic Research, 2017.

7. Lu, Y., and Y. Zhou. “A Review on the Economics of Artificial Intelligence.” Journal of Economic Surveys, 2021.

8. Hakami, N. “Navigating the Microeconomic Landscape of Artificial Intelligence: A Scoping Review.” Migration Letters, 2023.

9. Rahman, A. “Applications of Artificial Intelligence (AI) for Protecting Software Supply Chains (SSCs) in the Defense Industrial Base (DIB).” Cybersecurity & Information Systems Information Analysis Center, 2024.

10. Gordon, L. A., and M. P. Loeb. “Return on Information Security Investments: Myths vs. Realities.” Strategic Finance, 2002.

11. Sonnenreich, W., J. Albanese, and B. Stout. “Return on Security Investment (ROSI) – A Practical Quantitative Model.” Journal of Research and Practice in IT, 2006.

12. Kianpour, M., S. J. Kowalski, and H. Øverby. “Systematically Understanding Cybersecurity Economics: A Survey.” Sustainability, 2021.

13. Bistarelli, S., F. Fioravanti, P. Peretti, and F. Santini. “Evaluation of Complex Security Scenarios Using Defense Trees and Economic Indexes.” Journal of Experimental & Theoretical Artificial Intelligence, 2012.

14. Knoblach, M., and F. Stöckl. “What Determines the Elasticity of Substitution Between Capital and Labor? A Literature Review.” Journal of Economic Surveys, 2020.

15. Robinson, J. The Economics of Imperfect Competition. London: Springer, 1933.

16. Perloff, J. Microeconomics. Pearson Education, 2009.

Biography

Mazaher Kianpour is a researcher with a deep interest in how technology and economics intersect, especially in cybersecurity and its economic and policy challenges. He is currently involved in research at RISE Research Institutes of Sweden and serves as a postdoctoral researcher at the Norwegian University of Science and Technology (NTNU), concentrating on the regulatory risks connected to cybersecurity. Dr. Kianpour holds a Ph.D. in information security from NTNU.